Azure bites

-

@BernieTheBernie said in Azure bites:

Next fun.

I just wrote a fresh Azure Function Äpp with .Net 8 LTS. It references a project targeted to .Net 4.8. The latter makes use of the `TextRenderer` class, which is defined in `System.Windows.Forms`.

You guessed it.

[Error] System.IO.FileNotFoundException: Could not load file or assembly 'System.Windows.Forms, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089'. The system cannot find the file specified.

File name: 'System.Windows.Forms, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089'

at FarSide.TextHelper.DistributeText(String text, Font font, Int32 maxWidth)

at ...

There's a reason you get a big scary warning when you try to add a package/project reference to something that targets an earlier version of .NET than the consuming package does.

Or, shorter: You ==

-

@izzion said in Azure bites:

you get a big scary warning

No, that big scary warning does not happen at all.

-

@BernieTheBernie That reminds me a bit of errors I'd sometimes get when working with OSGi (because two classes differed in coordinates not normally shown to the user).

-

@BernieTheBernie said in Azure bites:

.Net 8 LTS. It references a project targeted to .Net 4.8

Nothing specific to Azure here. .нет 4.8 is .нет framework, while .нет 8.0 is .нет core. .нет core dropped a bunch of components that were windows-specific for the sake of making it portable to other platforms. So yeah, нет gonna work.

Microsoft really messed up .нет versioning, and backward compatibility. Though they kinda cornered themselves by making `System.Windows` part of the standard library in the first place.

Also, by implementing it new in .нет 8, you are already using the `dotnet-isolated` runtime (supported from .нет 6) and evaded the porting from the `dotnet` runtime (supported up to .нет 6). That upgrade basically means a huge search & replace of everything, because the annotations and the injected types are in different packages and most of them are called differently. You also need to add the entry point (for the `dotnet` runtime you uploaded a `.dll`, now you upload an `.exe`).

The bindings make Function Äpps slightly faster to write than a normal ASP.NET web app, but after seeing it a couple of times I'd choose the web app. The Äpp Service is similar to the Function Äpps, and runs on the same Service Plan, but because it runs any HTTP service, it is not tied to a specific SDK (you can even deploy it as a container if the runtimes they provide don't suit you).

-

@Bulb Another idea is to write that Functiön App in .Net 4.8 (yes, that old version is still supported). I need a "Timer Trigger", so I guess Asp.Net won't help here.

-

@BernieTheBernie said in Azure bites:

I need a "Timer Trigger", so I guess Asp.Net won't help here.

Actually we have a service in the internal app that does—actually, used to do—just that. It is written in asp.net and executed as a container workload. The timer didn't want to start automatically, but it does when any request is made against the service, so we used the standard kubernetes support for health-checks to make sure it starts.

The service has since been modified so that there is a Logic Äpp (that's the “codeless” kind you just assemble in the portal from components) set to run on a schedule that just triggers the HTTP endpoint of the service.

-

@Bulb And now print the directory listing, put the paper onto a wooden table, take a polaroid photograph, scan it, ...

-

@BernieTheBernie said in Azure bites:

@Bulb And now print the directory listing, put the paper onto a wooden table, take a polaroid photograph, scan it, ...

I had some reason to think internally the function äpp service also implements the timer triggers as http triggers called from some scheduler service.

-

@Bulb Very likely. The app gets started and stopped every now and then, and at specific moments in time the function actually runs. And on the "Test and Run" page of the Azure Portal, an http request is sent to the function äpp when testing...

-

Anyways, guess what the next fuck-up is...

Font.

Yeah, those sandboxed serverless thingies have fückêdüp fonts. You cannot create a `Bitmap` with text in it.

Great. Serverless is great.

-

@BernieTheBernie You don't need that

Use a letter CDN instead!

-

@BernieTheBernie said in Azure bites:

Great. Serverless is great.

That's why I recommend doing a web app, bundling it in a (docker) container and running that (in Azure you can run it in Äpp Service, Container Äpp or Kubernetes (AKS)). Because that way you have full control over the environment, and can test it locally and it's exactly what's going to run. Plus you can take it to a different cloud when you eventually get pissed enough by Microsoft.

-

@Bulb Also, a Function Äpp can use a container (I have never done so). Anyways, this is just for playing around, and collecting some experience.

And the experience is ... um ... you know the title of this web site...

-

Eventually I was f*** fed up with the great performance of my Azure Win 11 machine, so I decided to set up a Win 10 machine. Selected the `win10-21h2-entn-ltsc-g2` image, and a virtual SSD. Afterwards, I had to install whatever I needed (Visual Studio, Sql Server Management Studio, Notepad++, VisualSVN Server, Tortoise SVN, Firefox) and configure those applications.

Anyway, remember Infrastructure as Code? Of course, for a one-time setup, I did not create any scripts, ... so this second setup was a completely manual setup again.

Up to now the machine seems to perform ~~better~~ less worse than the Win 11 machine - that is a very low bar, actually a bar buried in the ground. Let's wait and see...

-

@BernieTheBernie “Infrastructure-as-Code” works with things that are at least somewhat designed for it, which is very much not the case of traditional operating systems, and especially of Windows. Linux at least had package managers for over 30 years and recent-ish-ly got the cloud-init for specifying what to install and configure on first start (the documentation is a bit thick, but once you sort out how the snippets fit together, it works reasonably well). But Windows has … complicated tools involving Active Directory and Group Policies.

Or, on Azure, you can provide a powershell script to run on first boot of the VM. Which behaves a bit weird (notably `Invoke-WebRequest` fails without the `-UseBasicParsing` option because it runs before the Infernet Exploder libraries are initialized or something) and is a total pain in the arse to debug.

… at least Chocolatey has been a thing for a while for installing software on Windows, but I am not sure it would run from the startup script due to the abovementioned quirk.

-

With my old big computer now being relatively fast due to the fresh SSD, I installed Win 10 Pro there in a VirtualBox machine. Downloading all the programs took very long - my internet connection at home makes some 17 MBit/s, so that's to be expected. The machine runs better than the cloudy Win 11 HDD machine - low bar. But the azure Win 10 SSD runs smoother.

And from the VirtualBox machine, I cannot access my cloudy serverless SqlServer because of its network security configuration (could change that, but it would also cost an IP address).

Hm.

What are the prices?

Both azure machines cost 11 (euro-) cents (plus 19% VAT) per day for the IP address (to be paid also when deallocated), and also have the same price tag for actually running the machine (`B2ms`, 2 vCPU, 8 GB, at 10 cents; `B4ms`, 4 vCPU, 16 GB, at 20 cents per hour; nothing when deallocated).

The hard disk price (to be paid also when deallocated) is 18 cents per day for the HDD, and 61 cents per day for SSD (both 127 GB - a smaller disk is not available). For some reason, it is not possible to replace the disk of a machine, you have to create a fresh machine instead (otherwise I could have it stored as an HDD while deallocated, and run it with an SSD...)

I.e. just for having the machine, it's €126 vs. €313 per year incl. VAT.

Hm.
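For anyone who wants to check the yearly figures, here is a rough sketch of the arithmetic. It assumes that the 19% VAT applies to both the IP address and the disk, and that a year is counted as 365 days; the constant and function names are made up for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

# Daily net prices in euros, as quoted in the post above.
IP_ADDRESS_PER_DAY = Decimal("0.11")
DISK_PER_DAY = {"HDD": Decimal("0.18"), "SSD": Decimal("0.61")}

# Assumptions: 19% VAT on everything, 365 days per year.
VAT_RATE = Decimal("0.19")
DAYS_PER_YEAR = 365


def yearly_standing_cost(disk_kind: str) -> Decimal:
    """Cost of merely keeping the (deallocated) machine for a year, incl. VAT."""
    net_per_day = IP_ADDRESS_PER_DAY + DISK_PER_DAY[disk_kind]
    gross_per_year = net_per_day * DAYS_PER_YEAR * (1 + VAT_RATE)
    # Round to whole euros, halves rounded up.
    return gross_per_year.quantize(Decimal("1"), rounding=ROUND_HALF_UP)


for kind in DISK_PER_DAY:
    print(f"{kind}: EUR {yearly_standing_cost(kind)} per year incl. VAT")
```

With those assumptions the two results round to the €126 and €313 quoted above. The hourly price for actually running the machine is deliberately left out, since it depends on usage.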

What about buying a "good" machine? That would cost around 4 years of having the SSD machine, but that would have a bigger local hard drive, more CPU, I'd also purchase more RAM, and there would be virtually zero costs for actually using the machine. Overall, break even reached in 3 years or less.

But I could not simply upgrade to more CPU / memory as I could do with azure.

-

@BernieTheBernie said in Azure bites:

And from the VirtualBox machine, I cannot access my cloudy serverless SqlServer because of its network security configuration (could change that, but it would also cost an IP address).

Why would it cost an IP address? The cloudy serverless sql server can be open from internet without buying a separate IP address. And if you enable AD/Entra authentication only, I wouldn't even worry much about having no firewall or a loose one.

-

@BernieTheBernie said in Azure bites:

18 cents per day for the HDD, and 61 cents per day for SSD (both 127 GB - a smaller disk is not available)

Where did you get those prices? The calculator shows me the prices per month only, and shows Standard SSD as only twice as expensive as Standard HDD, not more than three times (€5.44/M + €0.0005/10kiops for 128 GiB HDD and €8.87/M + €0.0018/10kiops for 128 GiB SSD); the former matches, the latter does not.
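The comparison can be spelled out with a small sketch. It assumes an average month of 365/12 days, ignores the per-operation charges, and assumes both sets of prices are net of VAT; the names are invented for the example.

```python
# Average month length used to convert daily billing to monthly (assumption).
DAYS_PER_MONTH = 365 / 12

# Daily prices from the bill and monthly list prices from the calculator, in euros.
BILLED_PER_DAY = {"HDD": 0.18, "SSD": 0.61}
CALCULATOR_PER_MONTH = {"HDD": 5.44, "SSD": 8.87}


def monthly_from_daily(per_day: float) -> float:
    """Scale a daily price to an average month."""
    return per_day * DAYS_PER_MONTH


def ratio_to_calculator(disk_kind: str) -> float:
    """How many times the calculator's monthly price the billed amount is."""
    billed = monthly_from_daily(BILLED_PER_DAY[disk_kind])
    return billed / CALCULATOR_PER_MONTH[disk_kind]


for kind in BILLED_PER_DAY:
    billed = monthly_from_daily(BILLED_PER_DAY[kind])
    print(f"{kind}: billed ~{billed:.2f}/month, "
          f"{ratio_to_calculator(kind):.2f}x the calculator price")
```

The HDD lands within about a percent of the calculator price, while the SSD comes out at roughly double, which suggests the billed disk is not the Standard SSD tier the calculator was showing.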

-

@Bulb Interesting idea, I might try it somewhen. Depends on 's preferences.

But I see a catch: my home IP address is dynamic, and I get a fresh address every night from the vast range of t-online ip addresses. That would mean that I'd have to open the firewall for every address or do a lot of rules.

-

@Bulb said in Azure bites:

@BernieTheBernie said in Azure bites:

18 cents per day for the HDD, and 61 cents per day for SSD (both 127 GB - a smaller disk is not available)

Where did you get those prices? The calculator shows me the prices per month only, and shows Standard SSD as only twice as expensive as Standard HDD, not more than three times (€5.44/M + €0.0005/10kiops for 128 GiB HDD and €8.87/M + €0.0018/10kiops for 128 GiB SDD) — the former matches, the later does not.

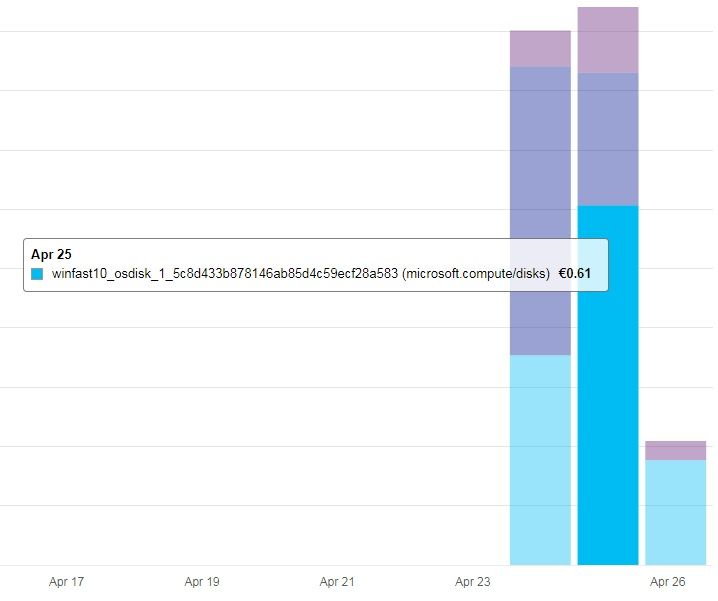

From the actual cost analysis. I drilled it down, and it shows me that Microsoft billed me for the SSD yesterday 61 cents:

It is a

Storage type: Premium SSD LRS - Size (GiB): 127 - Max IOPS: 500 - Max throughput (MBps): 100 - Encryption: SSE with PMK.

-

One moment... The disk can be changed later on. But it's complicated - not complicated per se, just complicated to find the hidden place. You have to select the disk (that long name shown in the screenshot above), and there you find "Size + performance" under "Settings". And now you can change it (but reducing the size is prevented). Just changed the performance tier from `P10` to `E10`, i.e. "Standard SSD".

Startup seemed a little bit slower, also starting VS, and then starting a razor äpp in debug mode. But worx.

As for the actual price, I'll learn.

-

@BernieTheBernie said in Azure bites:

@Bulb Interesting idea, I might try it somewhen. Depends on 's preferences.

But I see a catch: my home IP address is dynamic, and I get a fresh address every night from the vast range of t-online ip addresses. That would mean that I'd have to open the firewall for every address or do a lot of rules.

Since it's “serverless”, you are sharing the server with a lot of people that access it from all over the place, so

- protection against DDoS is Microsoft's problem, and they already have some in place,

- so is addressing the server vulnerabilities, and

- you are only concerned with someone guessing your credentials.

The last point I'd address by using the Entra authentication. In Azure, you create a security group, set it as admin and disable non-entra login.

In the .нет library you replace the credentials with `Authentication=Active Directory Default` in the connection string and it will pick the login from anywhere it can (environment, managed identity, Visual Studio or `az`), so it basically just works. And the management studio supports the interactive login, so that works too. Only if you also need the `sqlcmd` tool, you need a magic incantation:

`token_file=$(mktemp)`

`az account get-access-token --resource https://database.windows.net --query accessToken --output tsv | tr -d '\r\n' | iconv -f ascii -t utf-16le > "$token_file"`

`sqlcmd -G -P "$token_file" …# the other options…`

(for Linux shell or Git Bash; I don't know powershell well enough to remember how to get the token in UTF-16 off the top of my head)

And then I wouldn't worry about just setting the firewall to the whole range or even just leaving it open altogether.
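For shells where `tr` and `iconv` are not at hand, the token-file step could be sketched in Python instead. This is only an illustration of the same two transformations (drop CR/LF, write UTF-16LE without a byte order mark); the function names are hypothetical, `fetch_token` simply shells out to the same `az` command as above and is not exercised here, and the token in the demo is a placeholder.

```python
import shutil
import subprocess
import tempfile


def encode_token(raw: str) -> bytes:
    """Strip CR/LF and encode as UTF-16LE without BOM, like tr + iconv above."""
    cleaned = raw.replace("\r", "").replace("\n", "")
    if not cleaned.isascii():
        # iconv -f ascii would also refuse non-ASCII input.
        raise ValueError("token contains non-ASCII characters")
    return cleaned.encode("utf-16-le")


def write_token_file(raw: str) -> str:
    """Write the encoded token to a fresh temp file and return its path."""
    with tempfile.NamedTemporaryFile(delete=False) as handle:
        handle.write(encode_token(raw))
        return handle.name


def fetch_token() -> str:
    """Ask the az CLI for a token; needs az installed and a logged-in session."""
    az_path = shutil.which("az")
    if az_path is None:
        raise RuntimeError("az CLI not found on PATH")
    result = subprocess.run(
        [az_path, "account", "get-access-token",
         "--resource", "https://database.windows.net",
         "--query", "accessToken", "--output", "tsv"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout


# Demo with a placeholder value instead of a real token.
print(encode_token("abc\r\n"))
```

The path returned by `write_token_file(fetch_token())` would then take the place of `"$token_file"` in the `sqlcmd -G -P` call.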

-

You often complain about Delivery Distortion Fields. I found the reason for those distortions: your parcel delivery companies are cloud operated.

And then, the DDF can also occur with emails.

I have Microsoft send me a daily cost report, to be sent in the morning. I did not receive one yesterday - it did not land in the spam folder either. But this morning, it arrived as usual. Everything OK again?

Around lunchtime, yesterday's cost report arrived.

Headers from "Daily costs update for May 4, 2024":

Date: Sat, 04 May 2024 07:02:48 +0000

Received: from mail-nam-cu03-sn.southcentralus.cloudapp.azure.com ([20.97.34.220]) by redacted with ESMTPS (Nemesis) id redacted; Sat, 04 May 2024 09:02:50 +0200

It contains a chart with filename "Daily costs_2024-05-04-0515.png", showing costs up to the early morning of 05 May.

Headers from "Daily costs update for May 3, 2024":

Date: Sat, 04 May 2024 10:04:02 +0000

Received: from mail-nam-cu03-sn.southcentralus.cloudapp.azure.com ([20.97.34.220]) by redacted with ESMTPS (Nemesis) id redacted; Sat, 04 May 2024 12:04:05 +0200

It contains a chart with filename "Daily costs_2024-05-03-0515.png", showing costs up to the late morning of 05 May.

You see: the order of messages is not guaranteed. That's eventual consistency at its finest.

-

@BernieTheBernie said in Azure bites:

You see: the order of messages is not guaranteed.

Never has been with email. Even delivery isn't really guaranteed because of the layers in the middle. It feels more than ever like delivery is a miracle.

-

@Arantor said in Azure bites:

feels more than ever like delivery is a miracle.

I'd amend that to "anything in software working at all" being the miracle.

-

@Arantor said in Azure bites:

It feels more than ever like delivery is a miracle.

You must not have enough s to remember the Good Old Days before DNS. Instead of `username@domain`, email addresses were of the form `server1!server2!server3!username`. If any of those servers happened to be down when the previous server tried to forward the mail through them, your mail just silently disappeared into a black hole, never to be seen again. There was no rerouting around a failed server; if the exact path you specified wasn't available, no mail for you.
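As a toy illustration of the bang-path behaviour described above (not how any real mailer was implemented), the address can be split into its hops and "delivered" only if every hop is reachable. The function names and the set of reachable servers are made up for the example.

```python
from typing import List, Set, Tuple


def parse_bang_path(address: str) -> Tuple[List[str], str]:
    """Split 'server1!server2!user' into the ordered hops and the user name."""
    *hops, user = address.split("!")
    if not hops or not user or any(not hop for hop in hops):
        raise ValueError(f"not a valid bang path: {address!r}")
    return hops, user


def deliver(address: str, servers_up: Set[str]) -> bool:
    """Follow the path hop by hop; there is no rerouting around a dead hop."""
    hops, _user = parse_bang_path(address)
    for hop in hops:
        if hop not in servers_up:
            return False  # the mail silently disappears here
    return True


address = "server1!server2!server3!username"
print(parse_bang_path(address))
print(deliver(address, {"server1", "server2", "server3"}))
print(deliver(address, {"server1", "server3"}))
```

The last call models `server2` being down: the message is lost even though the other two servers are fine, because the sender fixed the exact route in the address.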

-

@BernieTheBernie The date field traditionally shown by email clients is set by the initial sender; if the date of the 3 May report is indeed 4 May 10:04, then it was microsoft who sent it late. If the date on the initial email is older, you can open up the raw email and check the dates on the received headers (note those timestamps are human-readable and will likely not be in a consistent timezone).
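A quick way to do that comparison without eyeballing time zones is to parse both stamps into timezone-aware datetimes. The sketch below uses Python's standard `email.utils` on the headers quoted earlier in the thread; the helper names are invented, and it assumes the timestamp of a `Received:` header is whatever follows the last semicolon.

```python
from email.utils import parsedate_to_datetime

# Header values copied from the two reports quoted earlier in the thread.
REPORTS = {
    "May 4": (
        "Sat, 04 May 2024 07:02:48 +0000",
        "from mail-nam-cu03-sn.southcentralus.cloudapp.azure.com ([20.97.34.220]) "
        "by redacted with ESMTPS (Nemesis) id redacted; Sat, 04 May 2024 09:02:50 +0200",
    ),
    "May 3": (
        "Sat, 04 May 2024 10:04:02 +0000",
        "from mail-nam-cu03-sn.southcentralus.cloudapp.azure.com ([20.97.34.220]) "
        "by redacted with ESMTPS (Nemesis) id redacted; Sat, 04 May 2024 12:04:05 +0200",
    ),
}


def received_stamp(received_header: str) -> str:
    """Return the timestamp part of a Received: header (text after the last ';')."""
    return received_header.rsplit(";", 1)[1].strip()


def transit_seconds(date_header: str, received_header: str) -> float:
    """Seconds between the sender's Date: and the receiving server's stamp."""
    sent = parsedate_to_datetime(date_header)
    received = parsedate_to_datetime(received_stamp(received_header))
    return (received - sent).total_seconds()


for label, (date_header, received_header) in REPORTS.items():
    print(label, transit_seconds(date_header, received_header), "seconds in transit")
```

Both messages spent only a couple of seconds in transit once the different UTC offsets are taken into account, so the late report was generated late rather than delayed on the way.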

-

@HardwareGeek said in Azure bites:

@Arantor said in Azure bites:

It feels more than ever like delivery is a miracle.

You must not have enough s to remember the Good Old Days before DNS. Instead of `username@domain`, email addresses were of the form `server1!server2!server3!username`. If any of those servers happened to be down when the previous server tried to forward the mail through them, your mail just silently disappeared into a black hole, never to be seen again. There was no rerouting around a failed server; if the exact path you specified wasn't available, no mail for you.

I am indeed not that old, but at least in that model delivery having the not-so-occasional brain-fart feels more like it would be grudgingly tolerated rather than entirely

-

@PleegWat said in Azure bites:

@BernieTheBernie The date field traditionally shown by email clients is set by the initial sender; if the date of the 3 May report is indeed 4 May 10:04, then it was microsoft who sent it late. If the date on the initial email is older, you can open up the raw email and check the dates on the received headers (note those timestamps are human-readable and will likely not be in a consistent timezone).

Too many email clients won't show you the full headers, or at least hide that option away. The `Received:` chain is interesting occasionally, and one of the best ways of detecting malicious messages. (Well, excluding the ones genuinely from HR.)

-

@PleegWat said in Azure bites:

then it was microsoft who sent it late

Exactly my point: not only is the order of *delivery* of emails not guaranteed by the email system, as pointed out by @Arantor, but the order of *generation* and *sending* of emails by Microsoft Azure is not guaranteed either.

-

@BernieTheBernie Well, at least you're not using Microsoft 364 directly, just Azure.

-

@Arantor `E_OFF_BY_ONE` - intended?

-

@BernieTheBernie Microsoft 364 — it works every day, except today.

-

@HardwareGeek 2024 is a leap year, so 365 is off by one anyways.

-

@BernieTheBernie said in Azure bites:

@Arantor `E_OFF_BY_ONE` - intended?

The 365 is an aspirational amount of uptime.

Pretty sure we're already on 364 for this year assuming no further outages.

360 is the record as far as I'm aware.